LLMs via Open OnDemand

Description

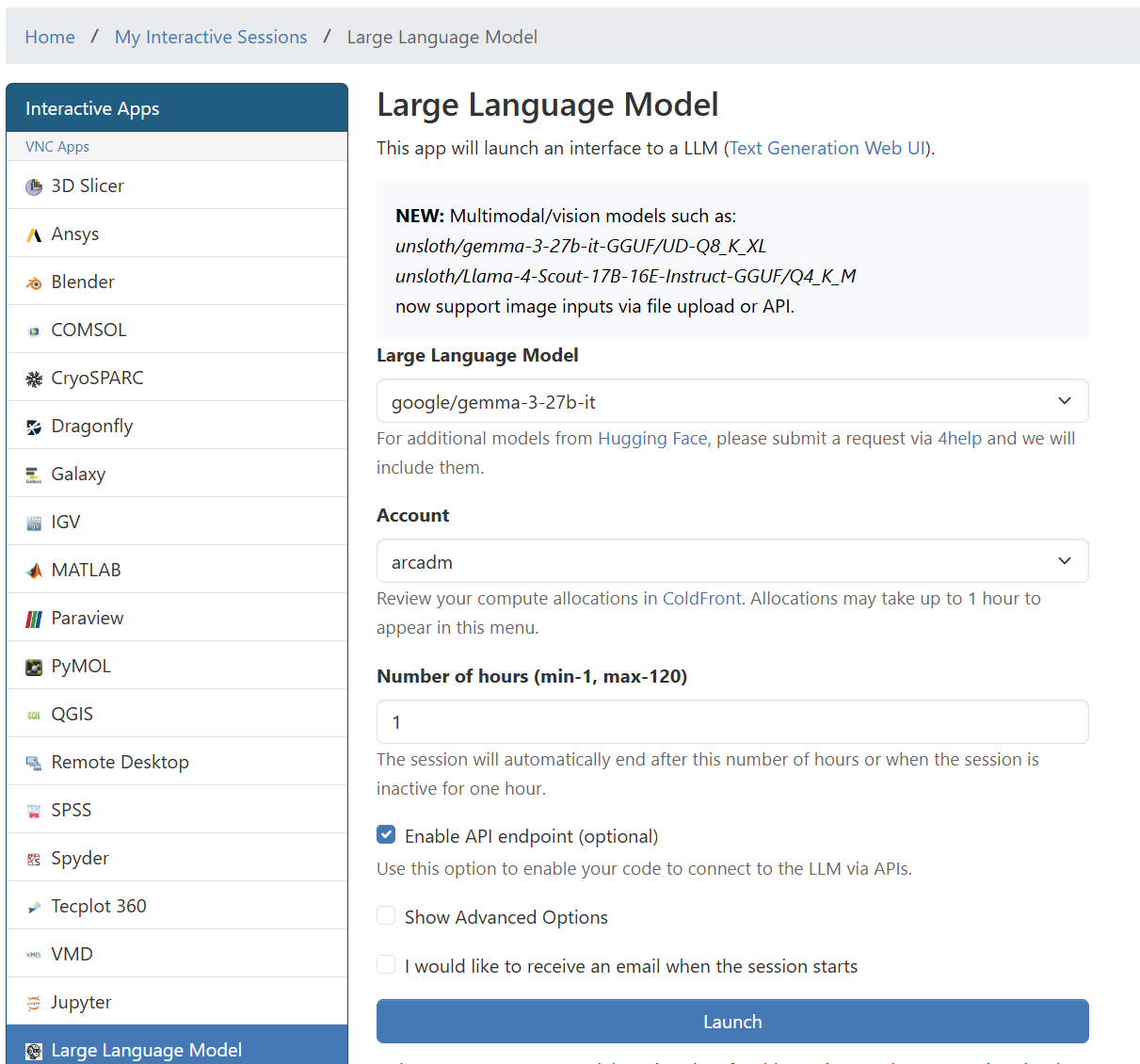

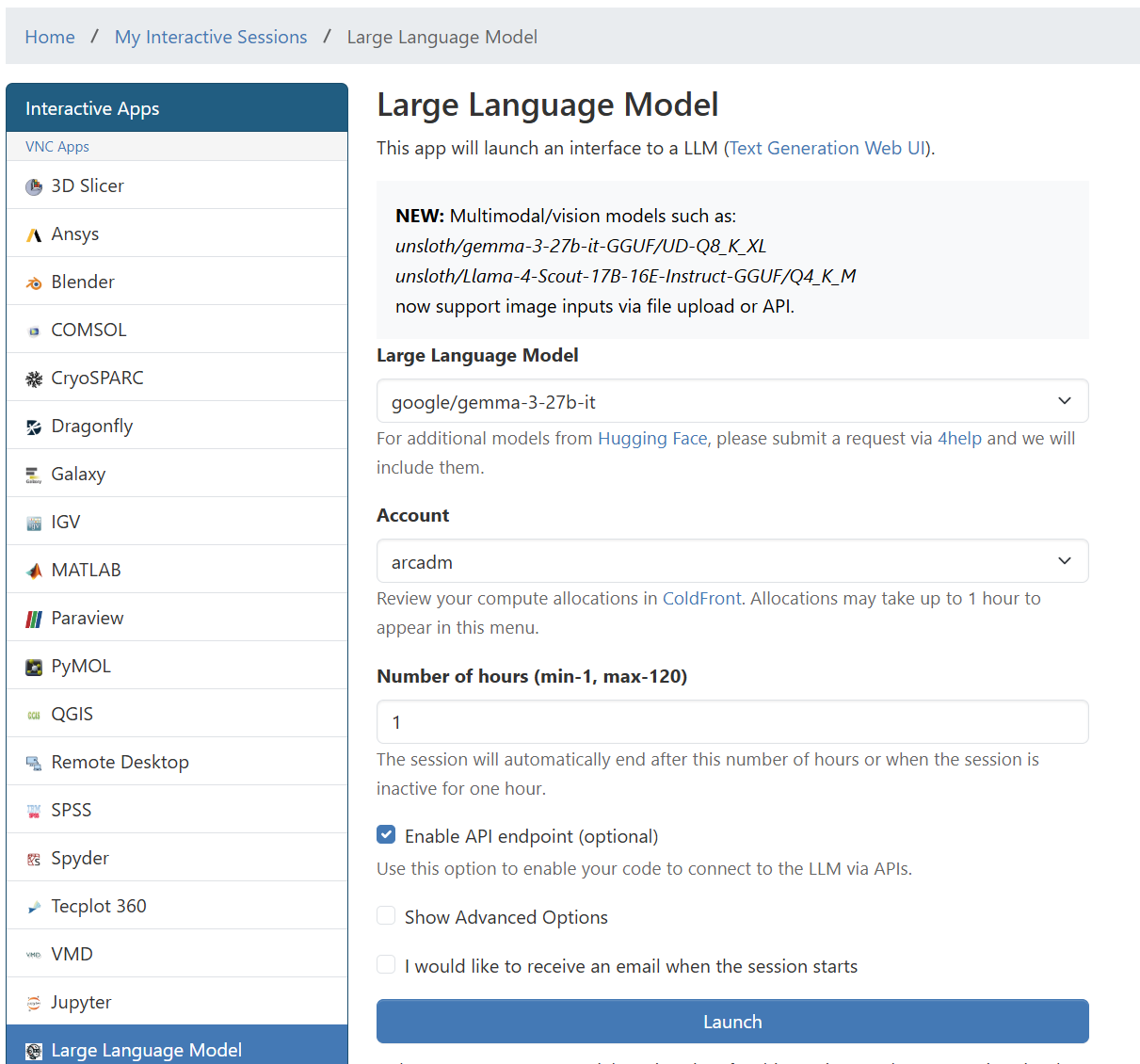

Open OnDemand at https://ood.arc.vt.edu lets users launch a dedicated LLM instance. Because each instance is reserved for a single user and has no request limits, it is well suited to running large batches of queries. The service is based on text-generation-webui and offers both a web interface and API access through an OpenAI-compatible API. Users can select a model from a list of models publicly available on Hugging Face.
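As a minimal sketch of the API access, the Python code below builds and sends an OpenAI-style chat completion request using only the standard library. The base URL, API key, and model name are placeholders (assumptions, not real values); use the ones shown for your own session. The `/chat/completions` path and the `Authorization: Bearer` header follow the OpenAI API convention, so confirm them against your session's connection details.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with the values shown for your own
# Open OnDemand session. These are not real endpoints or keys.
BASE_URL = "https://YOUR-SESSION-HOST/v1"
API_KEY = "YOUR-SESSION-API-KEY"
MODEL = "your-selected-model"


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The per-session API key is sent as a bearer token
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(base_url: str, api_key: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send one prompt and return the text of the first reply choice."""
    req = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Once the placeholders are filled in, `chat(BASE_URL, API_KEY, MODEL, "Summarize this abstract: ...")` returns the reply text. If you prefer a client library, the official `openai` Python package can be pointed at the same base URL and key instead.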

Access

All Virginia Tech students, faculty, and staff with an ARC account may access the service at https://ood.arc.vt.edu. If you don’t have an ARC account, you can create an account and follow the steps in the getting started guide to get an allocation.

Running LLMs via Open OnDemand will consume service units from the user’s allocation.

The service is reachable only from the VT network (on campus) or through the VT VPN (off campus).

A unique API key is generated for each session. Do not share your keys with others.
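One way to avoid sharing a key by accident (for example, by committing it with a script) is to keep it out of source code and read it from an environment variable. The sketch below assumes a variable named `OOD_LLM_API_KEY`; that name is a hypothetical convention, not something the service sets, so you would export it yourself with the key shown for your session.

```python
import os


def load_api_key(var_name: str = "OOD_LLM_API_KEY") -> str:
    """Return the session API key from an environment variable.

    OOD_LLM_API_KEY is a hypothetical name; the service does not set it.
    Export it yourself in the shell you run your scripts from.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set {var_name} to the API key shown for your current session.")
    return key
```

Because each session gets a new key, the variable needs to be updated whenever you start a new session.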

Restrictions

Data classification restriction. Researchers can use this tool for high-risk data: the service is approved by the VT IT Security Office (ITSO) for processing sensitive or regulated data. However, researchers should consult the VT Privacy and Research Data Protection Program about storing and analyzing high-risk data to ensure compliance with all existing regulations.

Each session is limited to a maximum of 5 days.

A session ends automatically if no activity is detected for one hour.

There are no per-minute or per-hour request limits, because each session runs exclusively for a single user.
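Without request limits, large jobs can be sent as a batch. The sketch below runs many prompts concurrently with a thread pool and returns the answers in input order. The `ask` argument is an assumption: any function you write that sends one prompt to your session and returns the reply text. Having no rate limit does not mean unlimited throughput; depending on the backend, concurrent requests may simply queue on the session's hardware, so `max_workers` is a tuning knob to start small, not a service setting.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_batch(prompts: List[str], ask: Callable[[str], str], max_workers: int = 4) -> List[str]:
    """Send many prompts concurrently and return the answers in input order.

    `ask` is a user-supplied function that sends one prompt to the session
    and returns the reply text (for example, a wrapper around an
    OpenAI-style client).
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the order of `prompts`, even if
        # individual requests finish out of order.
        return list(pool.map(ask, prompts))
```

For example, `run_batch(my_prompts, ask=my_ask)` where `my_ask` is a hypothetical one-prompt helper of your own.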

Models

ARC currently supports more than 40 commonly used models that are publicly available on Hugging Face. These models are also available on the clusters at /common/data/models/. To have additional models downloaded and deployed, submit a request via 4help. Users must accept each model's terms and conditions using their Hugging Face account.
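To see which models are present on a cluster, you can list the model directory. The sketch below assumes each model is stored in its own subdirectory of /common/data/models/; the actual layout may differ, so treat the output as a starting point rather than the authoritative list of models offered in Open OnDemand.

```python
from pathlib import Path
from typing import List


def list_local_models(root: str = "/common/data/models") -> List[str]:
    """Return the sorted names of subdirectories under `root`.

    Assumes one subdirectory per model; plain files are ignored.
    """
    base = Path(root)
    if not base.is_dir():
        return []  # e.g. when not running on an ARC cluster node
    return sorted(p.name for p in base.iterdir() if p.is_dir())
```

Run on a cluster login or compute node, `print(list_local_models())` prints the directory names found there.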

Security

This service is hosted entirely on-premises within the ARC infrastructure. No data is sent to any third party outside of the university. All user interactions are logged and preserved in compliance with VT IT Security Office Data Protection policies.

Disclaimer

LLMs may produce inaccurate, misleading, biased, or harmful information. Use of this service is undertaken entirely at the user’s own discretion and risk. The service is provided “as is”, and, to the fullest extent permitted by applicable law, ARC and VT expressly disclaim all warranties, whether express or implied, as well as any liability for damages, losses, or adverse consequences that may result from the use of, or reliance upon, the outputs generated by the models. By using this service, the user acknowledges and accepts these conditions, and agrees to comply with all applicable terms and conditions governing the use of the hosted models, associated software, and underlying platforms.